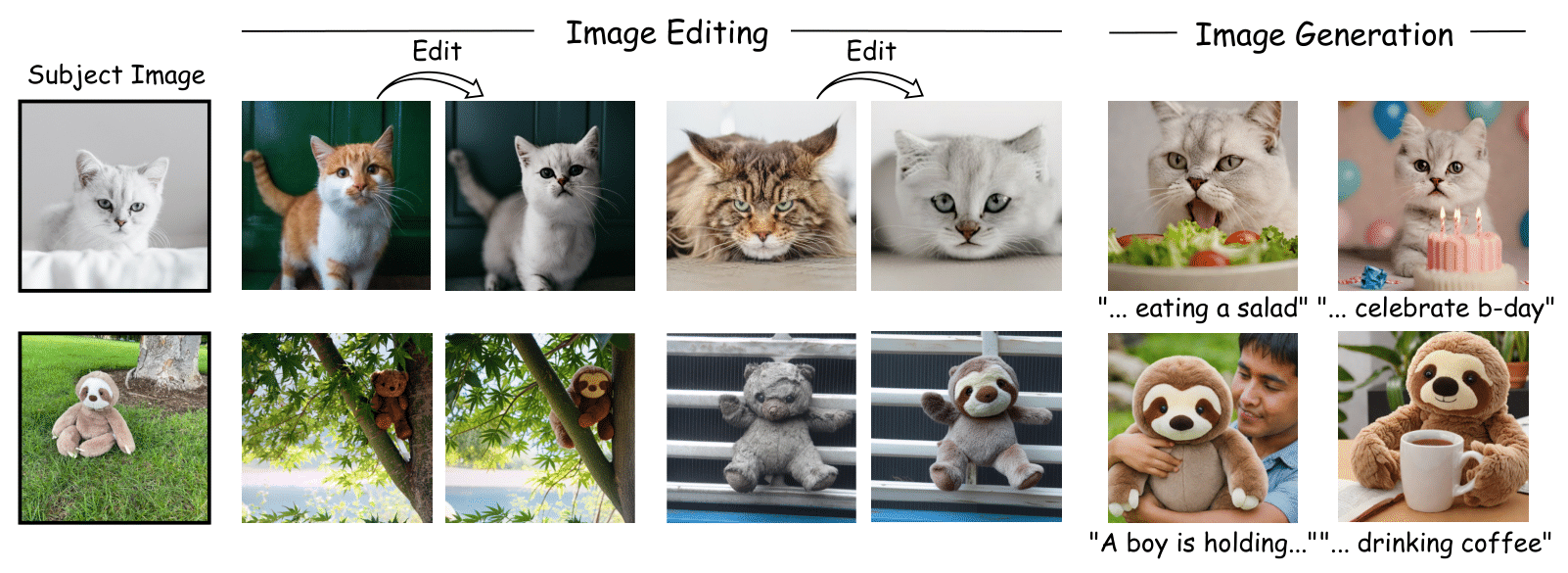

Personalizing image generation and editing is particularly challenging when only a few images of the subject, or even a single image, are available. A common approach to personalization is concept learning, which can integrate the subject into existing models relatively quickly, but the quality of the images it produces tends to deteriorate rapidly when the number of subject images is small. Quality can be improved by pre-training an encoder, but such training restricts generation to the training distribution and is time-consuming. Personalizing image generation and editing from a single image without training remains a difficult open challenge. Here, we present SISO, a novel, training-free approach based on optimizing a similarity score with an input subject image. More specifically, SISO iteratively generates images and optimizes the model based on a loss of similarity with the given subject image until a satisfactory level of similarity is achieved, which allows plug-and-play optimization of any image generator. We evaluated SISO on two tasks, image editing and image generation, using a diverse dataset of personal subjects, and demonstrate significant improvements over existing methods in image quality, subject fidelity, and background preservation.

Figure panels: for image generation, a subject image and a prompt ("A photo of a dog", "A photo of a chair", "A photo of a cat") produce an output; for image editing, a subject image and an input image produce an output.

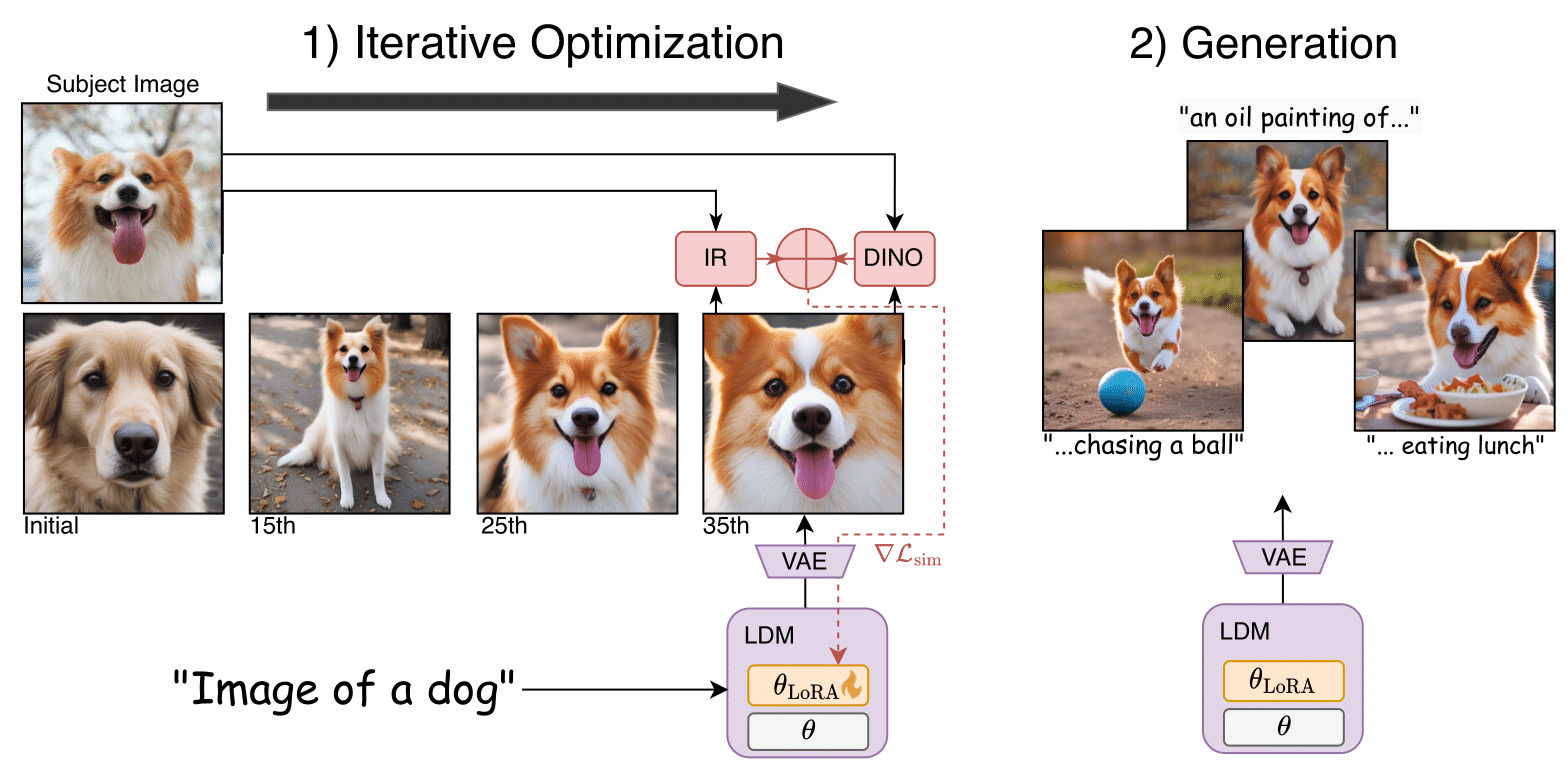

Our method generates images by iteratively optimizing based on pre-trained identity metrics IR and DINO. The added LoRA parameters are updated at each step, while the rest of the models remain frozen.
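The loop described above can be illustrated with a deliberately tiny toy. This is a sketch under stated assumptions, not the SISO implementation: the "generator" is a frozen linear map `W`, the adapter is a hypothetical rank-1 LoRA-style pair `(a, b)`, and a plain squared distance stands in for the IR- and DINO-based similarity loss. All function names are illustrative.

```python
# Toy sketch only: a linear "generator" with a rank-1 LoRA-style adapter.
# Squared distance is an assumed stand-in for a feature-based similarity loss.

def dot(u, v):
    return sum(ui * vi for ui, vi in zip(u, v))

def generate(W, a, b, z):
    """Compute x = (W + b a^T) z, where W is frozen and (a, b) is the adapter."""
    base = [dot(row, z) for row in W]
    scale = dot(a, z)
    return [base_i + b_i * scale for base_i, b_i in zip(base, b)]

def similarity_loss(x, subject):
    """Squared distance between the generated output and the subject target."""
    return sum((xi - si) ** 2 for xi, si in zip(x, subject))

def optimize_adapter(W, z, subject, lr=0.1, max_steps=200, tol=1e-6):
    a = [0.1] * len(z)  # "down" vector: small non-zero init
    b = [0.0] * len(z)  # "up" vector: zero init, so the adapter starts as a no-op
    history = []
    for _ in range(max_steps):
        x = generate(W, a, b, z)            # 1. generate with the current adapter
        loss = similarity_loss(x, subject)  # 2. score against the subject
        history.append(loss)
        if loss < tol:                      # 3. stop once similarity is satisfactory
            break
        # 4. gradient step on the adapter only; W is never modified
        g = [2.0 * (xi - si) for xi, si in zip(x, subject)]
        scale = dot(a, z)
        b_dot_g = dot(b, g)
        b = [bi - lr * gi * scale for bi, gi in zip(b, g)]
        a = [ai - lr * b_dot_g * zi for ai, zi in zip(a, z)]
    return a, b, history

W = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]  # frozen base weights
z = [1.0, 0.5, -0.5]                                     # fixed latent input
subject = [0.2, 1.0, 0.3]                                # toy "subject" target
a, b, history = optimize_adapter(W, z, subject)
```

In this toy, `history` records the loss at each iteration, so the decrease toward the stopping threshold can be inspected directly. A real setup would replace `generate` with a diffusion model and `similarity_loss` with distances in pre-trained feature spaces.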

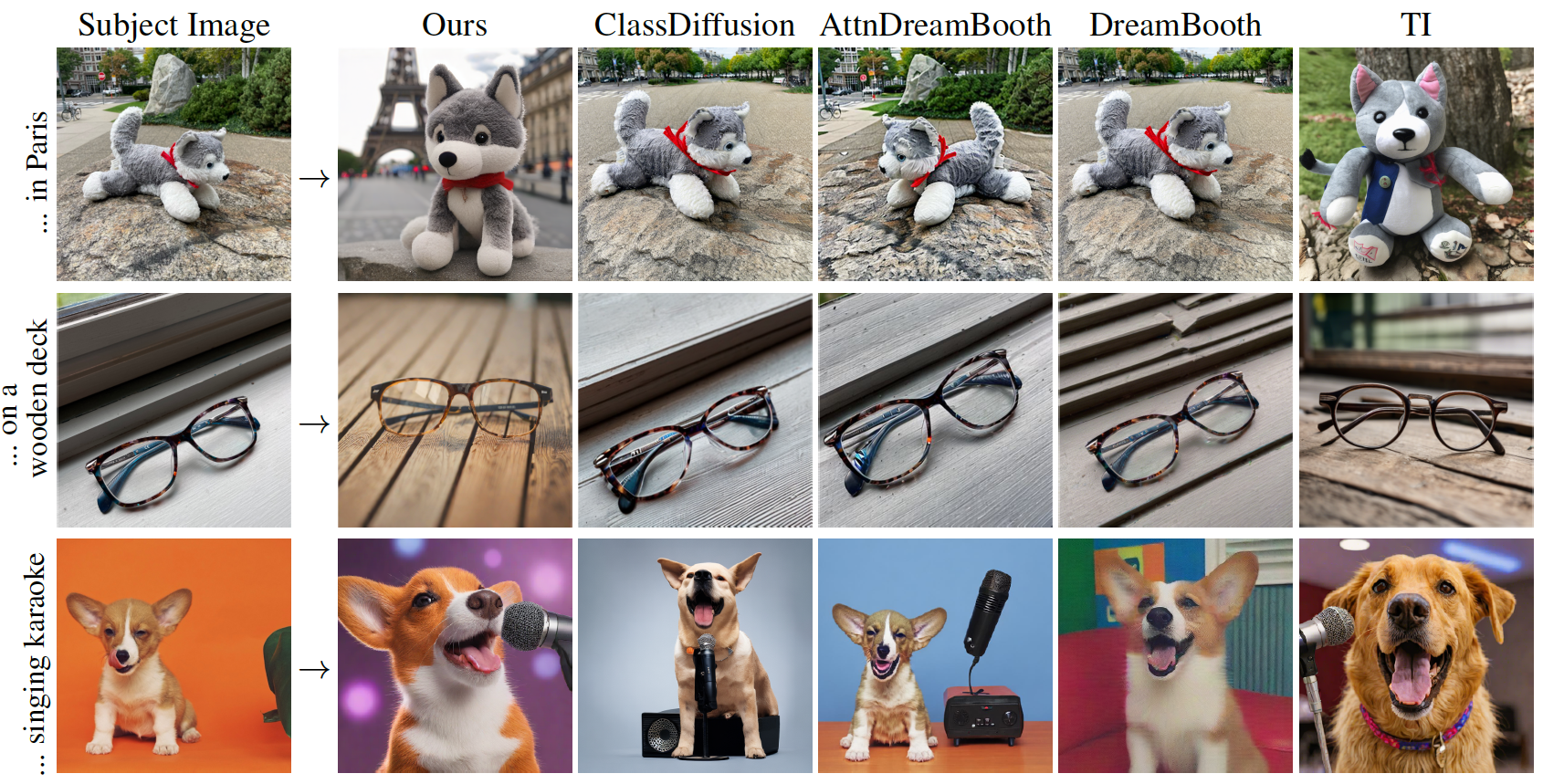

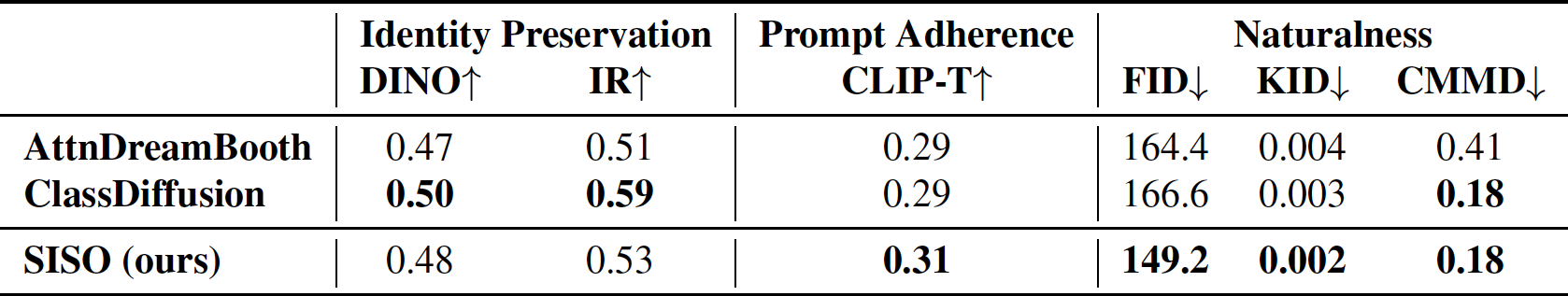

For image generation, we find that optimizing with a simple prompt is effective, since the optimized model generates novel images of the subject without further optimization, even with complex prompts, as shown on the right.

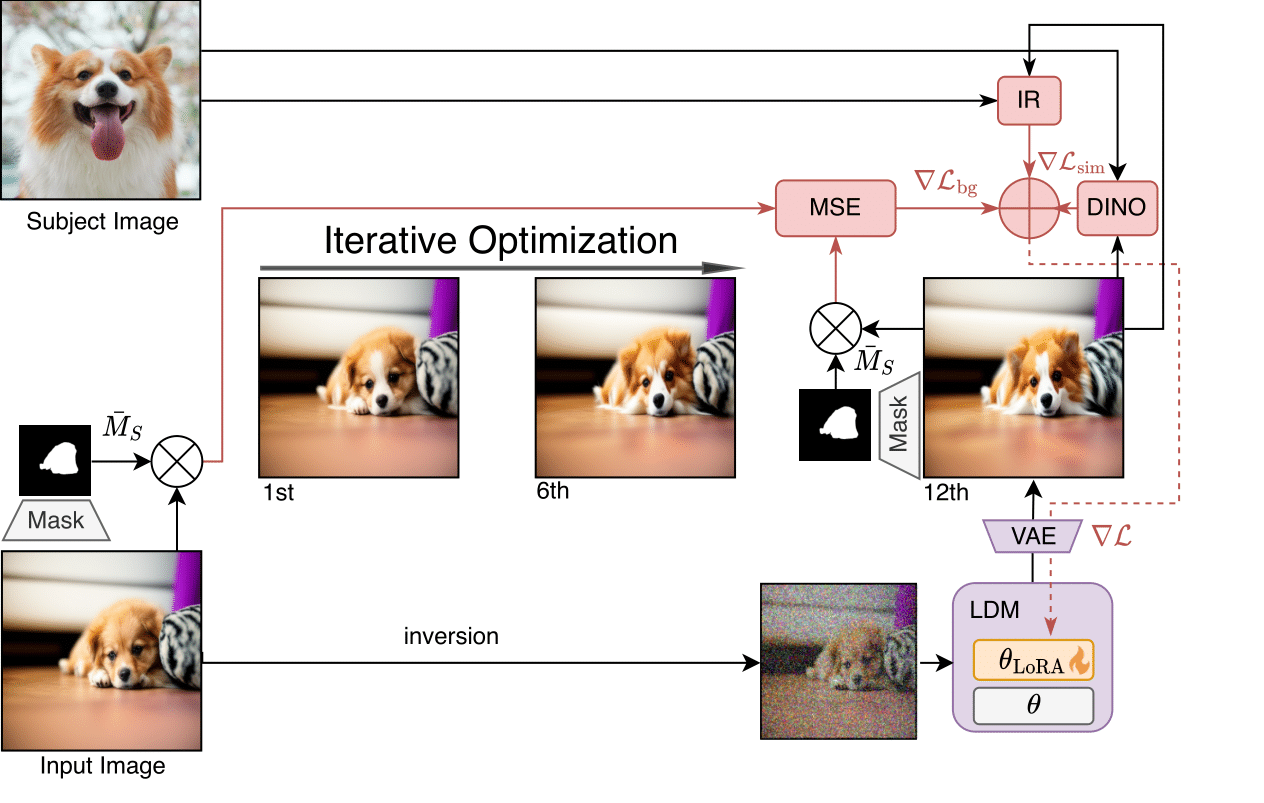

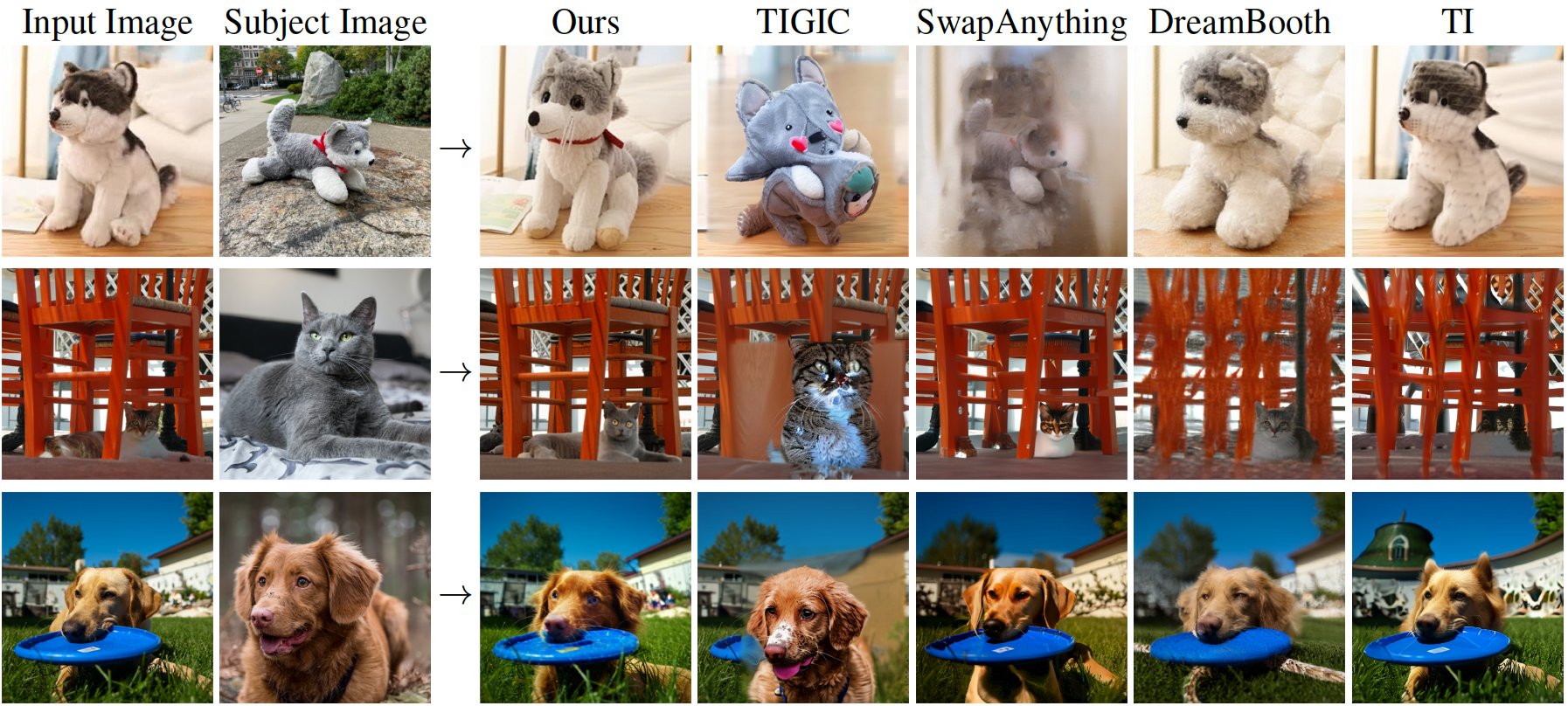

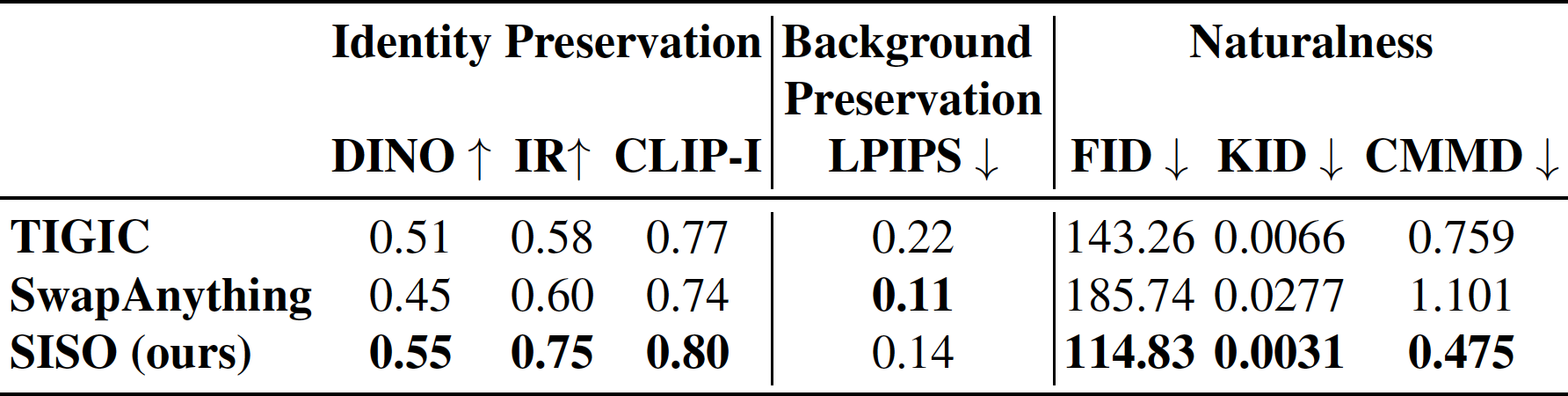

For image editing, we use diffusion inversion to map the input image to a latent. We also add a background preservation regularization term.
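The exact form of the background preservation term is not given here. As a hedged illustration, one plausible form is a masked mean squared error that penalizes changes outside the subject region. The functions below, the binary `subject_mask` convention (1 marks subject pixels), and the `weight` parameter are all assumptions made for this sketch.

```python
# Sketch of an assumed background regularizer: masked MSE outside the subject.

def background_preservation_loss(output, source, subject_mask):
    """Mean squared difference over pixels where subject_mask == 0 (background)."""
    total, count = 0.0, 0
    for out_row, src_row, mask_row in zip(output, source, subject_mask):
        for o, s, m in zip(out_row, src_row, mask_row):
            if m == 0:
                total += (o - s) ** 2
                count += 1
    return total / count if count else 0.0

def editing_loss(similarity_term, output, source, subject_mask, weight=1.0):
    """Combine a subject-similarity term with the weighted background term."""
    return similarity_term + weight * background_preservation_loss(
        output, source, subject_mask
    )

# Tiny 2x2 grayscale example: only the top-right pixel belongs to the subject.
output = [[1.0, 2.0], [3.0, 4.0]]
source = [[1.0, 0.0], [3.0, 2.0]]
subject_mask = [[0, 1], [0, 0]]
bg = background_preservation_loss(output, source, subject_mask)
total = editing_loss(0.5, output, source, subject_mask, weight=0.3)
```

Here the change at the subject pixel is ignored, while the change at the bottom-right background pixel is penalized, which is the behavior a background regularizer is meant to encourage.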

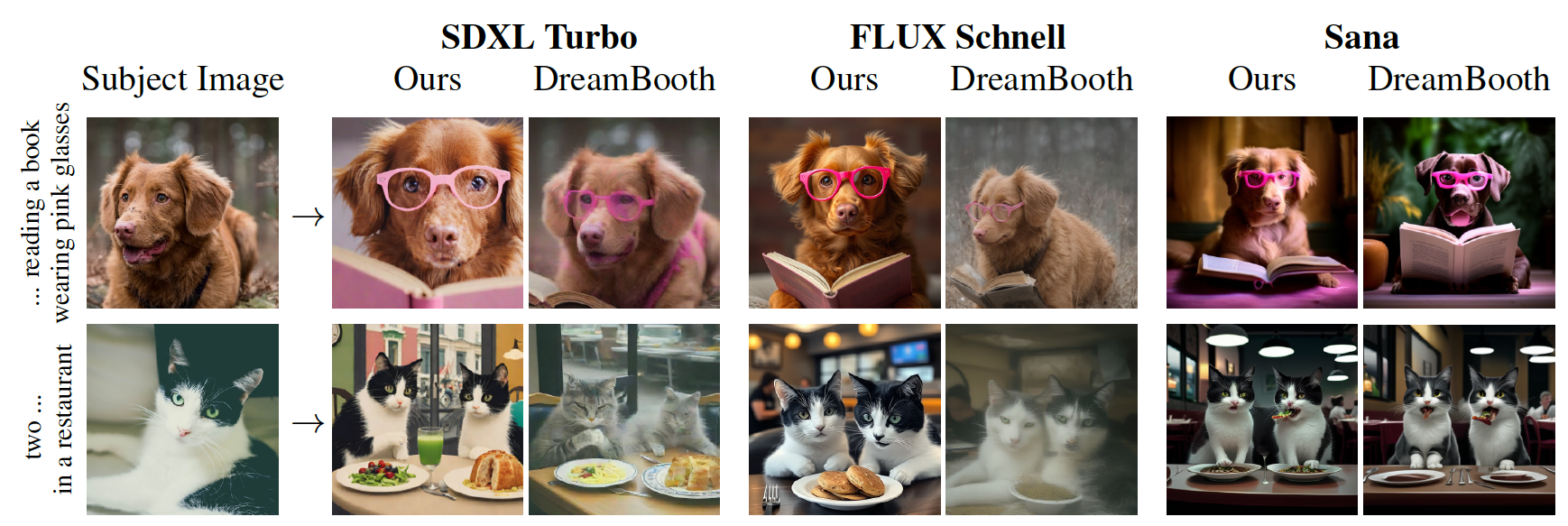

Our method is plug-and-play, allowing for easy integration with any image generator. We demonstrate this by integrating our method with SDXL Turbo, FLUX Schnell and Sana.

@article{shpitzer2025siso,

author = {Shpitzer, Yair and Chechik, Gal and Schwartz, Idan},

title = {Single Image Iterative Subject-driven Generation and Editing},

journal = {arXiv preprint arXiv:2503.16025},

year = {2025},

}